GAMEPOD.hu

-

In reply to Raymond's post #43561

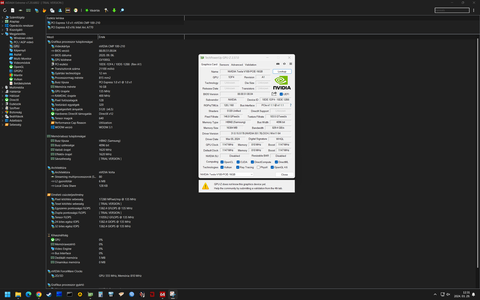

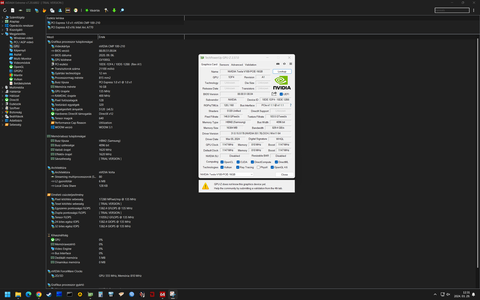

I installed Fooocus.

Total VRAM 16384 MB, total RAM 130776 MB

Set vram state to: NORMAL_VRAM

Always offload VRAM

Device: cuda:0 Tesla V100-PCIE-12GB : native

VAE dtype: torch.float32

Using pytorch cross attention

Refiner unloaded.

Running on local URL: http://127.0.0.1:7865

model_type EPS

UNet ADM Dimension 2816

It is quite slow at 1024x1024.

[Fooocus] Preparing Fooocus text #1 ...

[Prompt Expansion] proharver test image video cards, dramatic color, intricate, elegant, highly detailed, extremely scientific, shining, sharp focus, innocent, fine detail, beautiful, inspired, illustrious, complex, epic, amazing composition, fancy, elite, designed, clear, crisp, polished, artistic, symmetry, rich deep colors, cinematic, light, striking, marvelous, moving, very

[Fooocus] Preparing Fooocus text #2 ...

[Prompt Expansion] proharver test image video cards, mystical surreal, highly detailed, very beautiful, dramatic light, cinematic composition, clear artistic balance, inspired color, intricate, elegant, perfect background, professional fine detail, extremely nice colors, stunning, cute, futuristic, best, creative, positive, thoughtful, vibrant, successful, pure, hopeful, whole, romantic, iconic, shiny

[Fooocus] Encoding positive #1 ...

[Fooocus Model Management] Moving model(s) has taken 1.27 seconds

[Fooocus] Encoding positive #2 ...

[Fooocus] Encoding negative #1 ...

[Fooocus] Encoding negative #2 ...

[Parameters] Denoising Strength = 1.0

[Parameters] Initial Latent shape: Image Space (1024, 1024)

Preparation time: 3.55 seconds

[Sampler] refiner_swap_method = joint

[Sampler] sigma_min = 0.0291671771556139, sigma_max = 14.614643096923828

Requested to load SDXL

Loading 1 new model

[Fooocus Model Management] Moving model(s) has taken 34.70 seconds

100%|██████████████████████████████████████████████████████████████████████████████████| 30/30 [01:05<00:00, 2.19s/it]

Requested to load AutoencoderKL

Loading 1 new model

[Fooocus Model Management] Moving model(s) has taken 1.89 seconds

Image generated with private log at: T:\AI\Fooocus\Fooocus\outputs\2024-03-29\log.html

Generating and saving time: 103.38 seconds

[Sampler] refiner_swap_method = joint

[Sampler] sigma_min = 0.0291671771556139, sigma_max = 14.614643096923828

Requested to load SDXL

Loading 1 new model

[Fooocus Model Management] Moving model(s) has taken 27.48 seconds

33%|███████████████████████████▎ | 10/30 [00:21<00:43, 2.18s/it]

The only change is that I switched the card to WDDM mode with nvidia-smi.

Now AIDA64 and HWiNFO both detect it properly.

It would be usable for gaming too, but on a PCIe x1 link there is no point. It should be able to do FP16, though. One more thing: it barely heats up. I rarely see it above 60 °C, even though it does not get proper airflow; I am just blowing into a gap of about 2 cm with a blower fan. The previous Tesla M40 would have cooked under this load.
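To see where the time for the first image goes, the figures in the log above can be added up. This is only a rough sketch: it assumes that the sampling time and the two "Moving model(s)" times after the preparation step are all counted inside "Generating and saving time" and do not overlap, which the log itself does not confirm.

```python
# Figures copied from the Fooocus log of the first image (Tesla V100 run).
steps = 30
seconds_per_step = 2.19   # rate shown at the end of the sampler progress bar
move_sdxl = 34.70         # "Moving model(s)" reported after "Requested to load SDXL"
move_vae = 1.89           # "Moving model(s)" reported after "Requested to load AutoencoderKL"
total = 103.38            # "Generating and saving time"

# ASSUMPTION: these phases are sequential and all included in `total`.
sampling = steps * seconds_per_step
accounted = sampling + move_sdxl + move_vae

breakdown = [
    ("sampling", sampling),
    ("moving SDXL", move_sdxl),
    ("moving VAE", move_vae),
    ("other", total - accounted),
]
for label, secs in breakdown:
    print(f"{label:12s} {secs:7.2f} s  {100 * secs / total:5.1f} %")
```

Under that assumption, roughly a third of the first image's time is spent moving the SDXL model rather than sampling, so the per-step rate alone does not describe the whole run.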

The Vintage Story PH server is running again!

-

lenox

veteran

In reply to Raymond's post #43561

This is what I get:

Total VRAM 49140 MB, total RAM 130834 MB

Set vram state to: NORMAL_VRAM

Always offload VRAM

Device: cuda:0 NVIDIA RTX 6000 Ada Generation : native

VAE dtype: torch.bfloat16

[Fooocus] Encoding positive #1 ...

[Fooocus Model Management] Moving model(s) has taken 0.14 seconds

[Fooocus] Encoding positive #2 ...

[Fooocus] Encoding negative #1 ...

[Fooocus] Encoding negative #2 ...

[Parameters] Denoising Strength = 1.0

[Parameters] Initial Latent shape: Image Space (1024, 1024)

Preparation time: 1.98 seconds

[Sampler] refiner_swap_method = joint

[Sampler] sigma_min = 0.0291671771556139, sigma_max = 14.614643096923828

Requested to load SDXL

Loading 1 new model

[Fooocus Model Management] Moving model(s) has taken 2.62 seconds

100%|██████████████████████████████████████████████████████████████████████████████████| 30/30 [00:04<00:00, 6.36it/s]

Requested to load AutoencoderKL

Loading 1 new model

[Fooocus Model Management] Moving model(s) has taken 0.14 seconds

Image generated with private log at: S:\fooocus\Fooocus\outputs\2024-03-29\log.html

Generating and saving time: 8.16 seconds

[Sampler] refiner_swap_method = joint

[Sampler] sigma_min = 0.0291671771556139, sigma_max = 14.614643096923828

Requested to load SDXL

Loading 1 new model

[Fooocus Model Management] Moving model(s) has taken 1.23 seconds

100%|██████████████████████████████████████████████████████████████████████████████████| 30/30 [00:04<00:00, 6.29it/s]

Requested to load AutoencoderKL

Loading 1 new model

[Fooocus Model Management] Moving model(s) has taken 0.13 seconds

Image generated with private log at: S:\fooocus\Fooocus\outputs\2024-03-29\log.html

Generating and saving time: 6.73 seconds

Requested to load SDXLClipModel

Requested to load GPT2LMHeadModel

Loading 2 new models

Total time: 16.93 seconds

[Fooocus Model Management] Moving model(s) has taken 0.57 seconds

-

In reply to Raymond's post #43561

I installed the TensorRT extension for SD: about 8 it/s with it enabled, about 3 it/s with it disabled.

I don't know how to benchmark it properly.

Loading TensorRT engine: T:\AI\stable-diffusion-webui\models\Unet-trt\v1-5-pruned-emaonly_d7049739_cc70_sample=2x4x64x64-timesteps=2-encoder_hidden_states=2x77x768.trt

Loaded Profile: 0

sample = [(2, 4, 64, 64), (2, 4, 64, 64), (2, 4, 64, 64)]

timesteps = [(2,), (2,), (2,)]

encoder_hidden_states = [(2, 77, 768), (2, 77, 768), (2, 77, 768)]

latent = [(2, 4, 64, 64), (2, 4, 64, 64), (2, 4, 64, 64)]

100%|██████████████████████████████████████████████████████████████████████████████████| 20/20 [00:02<00:00, 7.84it/s]

Total progress: 30it [07:30, 15.00s/it]

100%|██████████████████████████████████████████████████████████████████████████████████| 20/20 [00:02<00:00, 7.71it/s]

Total progress: 100%|██████████████████████████████████████████████████████████████████| 20/20 [00:02<00:00, 6.71it/s]

100%|██████████████████████████████████████████████████████████████████████████████████| 20/20 [00:02<00:00, 7.30it/s]

Total progress: 100%|██████████████████████████████████████████████████████████████████| 20/20 [00:02<00:00, 6.88it/s]

Dectivating unet: [TRT] v1-5-pruned-emaonly████████████████████████████████████████████| 20/20 [00:02<00:00, 8.02it/s]

100%|██████████████████████████████████████████████████████████████████████████████████| 20/20 [00:06<00:00, 2.93it/s]

Total progress: 100%|██████████████████████████████████████████████████████████████████| 20/20 [00:06<00:00, 2.94it/s]

Activating unet: [TRT] v1-5-pruned-emaonly█████████████████████████████████████████████| 20/20 [00:06<00:00, 3.01it/s]

Loading TensorRT engine: T:\AI\stable-diffusion-webui\models\Unet-trt\v1-5-pruned-emaonly_d7049739_cc70_sample=2x4x64x64-timesteps=2-encoder_hidden_states=2x77x768.trt

Loaded Profile: 0

sample = [(2, 4, 64, 64), (2, 4, 64, 64), (2, 4, 64, 64)]

timesteps = [(2,), (2,), (2,)]

encoder_hidden_states = [(2, 77, 768), (2, 77, 768), (2, 77, 768)]

latent = [(2, 4, 64, 64), (2, 4, 64, 64), (2, 4, 64, 64)]

100%|██████████████████████████████████████████████████████████████████████████████████| 20/20 [00:02<00:00, 7.88it/s]

Total progress: 100%|██████████████████████████████████████████████████████████████████| 20/20 [00:02<00:00, 6.85it/s]

Total progress: 100%|██████████████████████████████████████████████████████████████████| 20/20 [00:02<00:00, 8.00it/s]
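One rough way to compare such runs is to pull the rates out of the progress bars and average them per configuration. The sketch below is only an illustration: the names `RATE_RE` and `rates_it_per_s` are made up for this example, and it assumes the rate always appears at the end of a bar as either `it/s` or `s/it`, as it does in the logs in this thread. Averaging a handful of bars is not a real benchmark; more runs with a fixed seed, prompt and step count would give steadier numbers.

```python
import re
from statistics import mean

# Matches the rate token that closes a tqdm bar, e.g. "7.84it/s]" or "2.19s/it]".
RATE_RE = re.compile(r"(\d+(?:\.\d+)?)(it/s|s/it)\]")


def rates_it_per_s(log_text: str) -> list[float]:
    """Return every rate found in log_text, normalized to iterations per second."""
    rates = []
    for value, unit in RATE_RE.findall(log_text):
        number = float(value)
        # "s/it" is the inverse of "it/s", so invert it to get a common unit.
        rates.append(number if unit == "it/s" else 1.0 / number)
    return rates


# Sampler bars copied from the log above, with the bar graphics left out.
trt_on = """
20/20 [00:02<00:00, 7.84it/s]
20/20 [00:02<00:00, 7.71it/s]
20/20 [00:02<00:00, 7.30it/s]
"""
trt_off = """
20/20 [00:06<00:00, 2.93it/s]
"""

on = mean(rates_it_per_s(trt_on))
off = mean(rates_it_per_s(trt_off))
print(f"TensorRT on: {on:.2f} it/s, off: {off:.2f} it/s, speedup: {on / off:.2f}x")
```

Because the helper normalizes both units, the same function can also put the 2.19s/it and 6.36it/s Fooocus figures quoted earlier in the thread on one scale.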

Topic subject:

A close look at NVIDIA's newly arriving or future graphics processors, kept as technical as possible: architecture, weighing the odds, curiosities, speculation, and so on.
